In the world of manufacturing, every product should be tested to some degree. Force measurement has long been an integral part of testing in most industries, allowing quality control professionals to ensure that their products are reliable and compliant with the standards to which they were designed.

Assessing quality and helping prevent premature product failures in the field have traditionally required rigorous, in-depth testing and inspection. That work involves collecting and processing a significant amount of data to support accurate failure-prevention analysis.

Artificial intelligence, or “AI,” can be used to collect and analyze this data more efficiently, streamlining quality control. This article discusses AI and how it can improve the analysis of force measurement data to help prevent future product or production failures.

AI: In A Nutshell

So, what is AI? AI gives computer systems the ability to simulate human thought processes and perform tasks that would typically require human intelligence. Problem-solving, learning, pattern recognition, and decision-making are just some of the capabilities AI has to offer.

It is both unnerving and fascinating to think about: computers with human-like intelligence that can reason, perceive, infer, and adapt based on the data they are given, all made possible by programmed algorithms.

Specialized programs, or algorithms, are implemented based on the requirements of the system into which they are integrated. Machine learning and deep learning are two common predictive AI techniques used to process and interpret data. We’ll discuss these later in the article.

The Data – Where Force Meets AI

To optimize AI integration with quality control, we first need to understand where the data comes from: force measurement equipment.

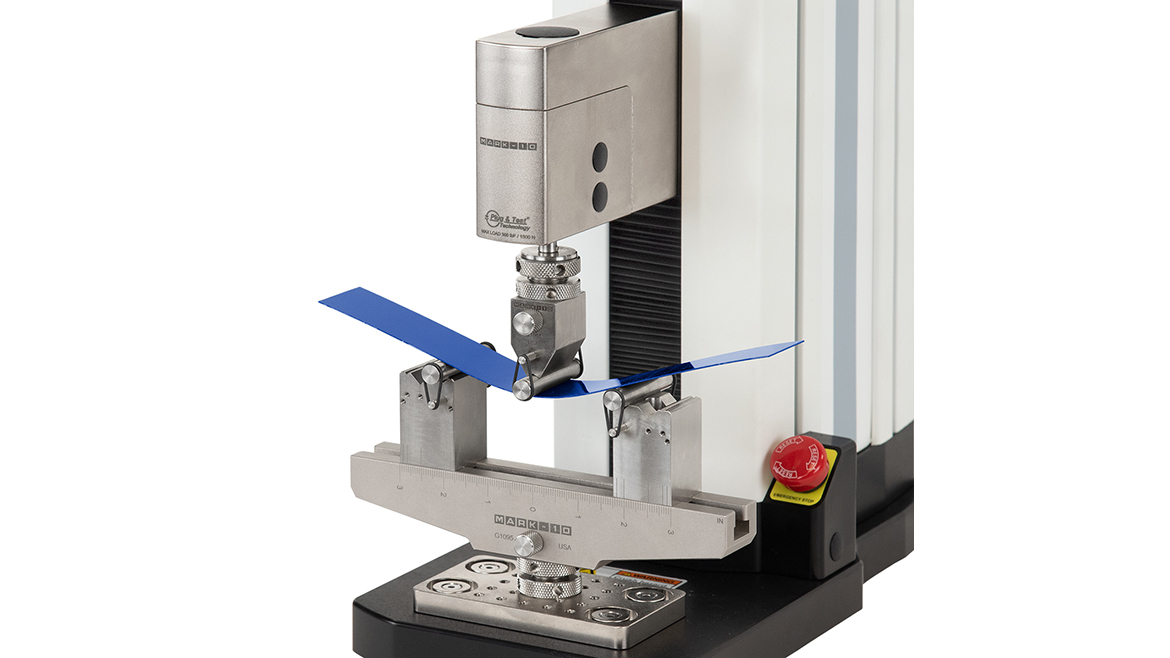

The driving force behind product evaluation through force measurement is the force or materials tester, often referred to as a universal testing machine, or UTM. UTMs are typically equipped with force sensors that use strain gage technology to acquire raw force measurement readings.

These machines can acquire an abundance of data pertinent to product and material inspection, such as tensile strength, shear strength, and fatigue life. Collected data usually focuses on either the maximum, or “peak,” force, or the entire set of data for each test, called the “raw” data. Depending on the data collection rate, a raw dataset can be very large, potentially containing tens of thousands of data points per test that need to be analyzed.
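
To put those numbers in perspective, the sketch below assumes a hypothetical test sampled at 1,000 Hz for 30 seconds and extracts the peak force from the resulting raw dataset. The sample rate, duration, and synthetic force curve are illustrative assumptions, not values from any particular instrument.

```python
import math

def raw_point_count(sample_rate_hz: float, duration_s: float) -> int:
    """Number of raw data points one test produces at a given collection rate."""
    return round(sample_rate_hz * duration_s)

def peak_force(raw_forces: list[float]) -> float:
    """The "peak" result is simply the maximum value in the raw dataset."""
    return max(raw_forces)

# Hypothetical test: sampled at 1,000 Hz over a 30 s pull.
n = raw_point_count(1000, 30)  # 30,000 data points for a single test

# Synthetic raw curve (assumed shape): force rises to about 500 N, then falls off.
raw = [500.0 * math.sin(math.pi * i / n) for i in range(n)]

print(n, round(peak_force(raw), 1))  # 30000 500.0
```

Storing only the peak reduces each test to one number, while keeping the raw dataset preserves the full curve for later analysis.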

Data formats vary among manufacturers but commonly include ASCII text, CSV, XML, and binary files. Text-based formats such as CSV and XML are especially straightforward to parse, and all of these formats can be exported and read into AI systems for analysis.
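
Because CSV is plain text, a few lines of standard-library Python are enough to load an export for analysis. This is a minimal sketch assuming a hypothetical export with `time_s` and `force_n` columns; actual column names and layouts differ by manufacturer and should be checked against the instrument’s documentation.

```python
import csv
import io

def load_force_csv(text: str) -> tuple[list[float], list[float]]:
    """Parse a CSV export into parallel lists of time (s) and force (N).

    Assumes hypothetical header names "time_s" and "force_n".
    """
    times, forces = [], []
    for row in csv.DictReader(io.StringIO(text)):
        times.append(float(row["time_s"]))
        forces.append(float(row["force_n"]))
    return times, forces

# Example export with hypothetical values.
sample = """time_s,force_n
0.000,0.0
0.001,12.5
0.002,25.1
"""
t, f = load_force_csv(sample)
print(t, f)  # [0.0, 0.001, 0.002] [0.0, 12.5, 25.1]
```

For a file on disk, `csv.DictReader` can read an opened file object directly (opened with `newline=""`) instead of going through `io.StringIO`.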

However, raw data is rarely perfect as collected, and it may require some adjustment within the measuring equipment before it is useful. One common example of imperfect data is “noise.”

Mechanical “noise” refers to inconsistencies in the dataset caused by environmental or mechanical vibrations that destabilize the force sensor’s measurements. Noise can skew the data and lead to false testing outcomes. It is most noticeable on a graph, where the plotted data points follow jagged, irregular patterns instead of a smooth curve. Lower-capacity, high-sensitivity sensors are more prone to the effects of noise than their higher-capacity, less-sensitive counterparts.

The good news is that many, if not all, force measurement systems can filter data points in real time, with the amount of filtering adjusted to the magnitude of the noise.

One type of filter that force gages can apply is a moving average, which acts as a low-pass filter: it lets lower-frequency signals pass through while suppressing higher-frequency spikes by averaging the data over a segment, or window, of points. Each average is then assigned to the middle point of its window. Lower noise levels need only a small window, while heavier noise calls for a larger one, at the cost of also smoothing genuine sharp features such as the peak. Because the average moves from window to window instead of averaging the entire dataset at once, the underlying trend of the data remains intact, making it more useful than inconsistent, unfiltered data.
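
A centered moving average of the kind described above can be sketched in a few lines. The window size is an assumed parameter; an odd window is used so that each average maps onto a single middle point, and edge points without a full window on both sides are left out of the output. Actual force gages implement their own filters, and the details vary by instrument.

```python
def centered_moving_average(data: list[float], window: int) -> list[float]:
    """Low-pass filter: replace each point with the mean of the window centered on it.

    Only points with a full window on both sides are returned, so the output
    is shorter than the input by window - 1 points.
    """
    if window < 1 or window % 2 == 0:
        raise ValueError("window must be a positive odd number")
    if len(data) < window:
        return []
    running = sum(data[:window])
    out = [running / window]
    for i in range(window, len(data)):
        # Slide the window by one sample: add the newest point, drop the oldest.
        running += data[i] - data[i - window]
        out.append(running / window)
    return out

# A steady loading ramp with an alternating +/-1 N spike on every sample.
noisy = [float(i) + (1.0 if i % 2 else -1.0) for i in range(10)]
smoothed = centered_moving_average(noisy, 3)
```

With a window of 3, each output stays within 1/3 N of the underlying ramp value at its center point, compared with 1 N for the raw samples, while the upward trend is preserved.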

AI programming can be used to detect signal fluctuations and adjust the filtering applied to the data within the test setup accordingly.
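
The idea of adapting the filter to the signal can be illustrated with a simple rule-based stand-in. The sketch below is not a machine learning model. It estimates noise from second differences, which cancel a steady loading ramp, and picks a larger moving-average window when the estimate is higher. The thresholds and window sizes are hypothetical values chosen for illustration. An AI-driven system would instead learn such settings from data.

```python
import math

def estimate_noise(data: list[float]) -> float:
    """Estimate noise level (N) from second differences.

    Second differences cancel any locally linear trend, such as a steady
    loading ramp. For independent noise with standard deviation sigma they
    have standard deviation sigma * sqrt(6), hence the correction factor.
    """
    if len(data) < 3:
        raise ValueError("need at least 3 points")
    d2 = [data[i + 1] - 2 * data[i] + data[i - 1] for i in range(1, len(data) - 1)]
    rms = math.sqrt(sum(d * d for d in d2) / len(d2))
    return rms / math.sqrt(6)

def choose_window(noise: float) -> int:
    """Map a noise estimate to an odd moving-average window (hypothetical thresholds)."""
    if noise < 0.05:
        return 1   # effectively no filtering
    if noise < 0.5:
        return 5
    return 15

ramp = [0.5 * i for i in range(200)]                                  # clean ramp
noisy = [x + (1.0 if i % 2 else -1.0) for i, x in enumerate(ramp)]    # spiky ramp

print(choose_window(estimate_noise(ramp)), choose_window(estimate_noise(noisy)))  # 1 15
```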

Long story short: The more accurate the data is, the more accurate the results, and the more useful the data will be for AI integration.

Force Data Analysis Before AI

Statistical process control (SPC) software, which uses statistics to monitor and control a process, has long been a primary link between force measurement testing and the organization and analysis of the resulting data. It makes it easier for operators to run a test and have the data sent directly to a software application that stores the measurements and generates graphs and charts based on historical data.

One objective of SPC integration is to identify pass/fail values: results that fall within or outside the designated limits set for the testing process. In manufacturing, this leads to corrective action in the production process as needed, helping prevent future product failures based on what is already known.
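
To make the SPC side concrete, the sketch below shows two checks that are easy to confuse: a pass/fail check against specification limits, and statistical control limits computed from the process’s own history. It uses an individuals (X) control chart, with sigma estimated from the average moving range divided by the standard constant d2 = 1.128. The peak-force values and specification limits are hypothetical.

```python
def pass_fail(value: float, lower_spec: float, upper_spec: float) -> str:
    """Pass/fail against designated specification limits."""
    return "PASS" if lower_spec <= value <= upper_spec else "FAIL"

def individuals_limits(values: list[float]) -> tuple[float, float, float]:
    """Lower limit, center line, and upper limit for an individuals (X) chart.

    Sigma is estimated as the average moving range of consecutive points
    divided by d2 = 1.128; the limits sit 3 sigma either side of the mean.
    """
    center = sum(values) / len(values)
    moving_ranges = [abs(b - a) for a, b in zip(values, values[1:])]
    sigma = (sum(moving_ranges) / len(moving_ranges)) / 1.128
    return center - 3 * sigma, center, center + 3 * sigma

# Hypothetical peak forces (N) from a run of tests, with specs of 480-520 N.
peaks = [501.0, 499.0, 502.0, 498.0, 500.0, 503.0, 497.0, 500.0]
lcl, cl, ucl = individuals_limits(peaks)
results = [pass_fail(p, 480.0, 520.0) for p in peaks]

# A new result can pass spec yet fall outside the control limits, a sign that
# the process has shifted and corrective action may be needed.
new_peak = 512.0
print(pass_fail(new_peak, 480.0, 520.0), new_peak > ucl)  # PASS True
```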

When AI is used to process the same data, the information can be fed directly into an algorithm specifically programmed to select the necessary data points and project future performance and potential failures based on the needs of the test. In this way, AI complements SPC software by helping uncover trends and draw conclusions.

Which AI Algorithms Are Used To Process Force Measurement Data?

The two AI techniques mentioned earlier in this article, machine learning and deep learning, are closely related subsets of predictive AI (deep learning is itself a form of machine learning). Both can learn from input data and can be used for force data analysis by recognizing specific patterns and trends.

Machine learning algorithms detect patterns in the available data and use them to predict outcomes. These algorithms rely on feature engineering, in which specific characteristics of the input data, or “features,” are selected by human programmers for any given test.

Features such as peak and average force, as well as results from more advanced material property tests, such as Young’s modulus and yield strength, can all be selected manually as needed.
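
Manual feature engineering of this kind can be sketched as a function that turns one raw test into a handful of numbers. The example assumes a hypothetical tensile specimen with a known cross-sectional area and gauge length, converts force and extension to engineering stress and strain, and estimates Young’s modulus as the least-squares slope over an assumed initial linear-elastic region. The specimen dimensions, the elastic-region cutoff (`elastic_points`), and the data are illustrative assumptions; standardized test methods define these details precisely.

```python
def least_squares_slope(xs: list[float], ys: list[float]) -> float:
    """Slope of the ordinary least-squares line through (xs, ys)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    return sxy / sxx

def extract_features(forces_n: list[float], extensions_mm: list[float],
                     area_mm2: float, gauge_length_mm: float,
                     elastic_points: int) -> dict[str, float]:
    """Turn one raw tensile test into a few hand-picked features.

    elastic_points is the (assumed) number of initial samples lying in the
    linear-elastic region, where the modulus slope is measured.
    """
    stresses = [f / area_mm2 for f in forces_n]             # engineering stress, MPa (N/mm^2)
    strains = [e / gauge_length_mm for e in extensions_mm]  # engineering strain, mm/mm
    modulus_mpa = least_squares_slope(strains[:elastic_points], stresses[:elastic_points])
    return {
        "peak_force_n": max(forces_n),
        "average_force_n": sum(forces_n) / len(forces_n),
        "youngs_modulus_gpa": modulus_mpa / 1000.0,
    }

# Hypothetical specimen: 10 mm^2 cross-section, 50 mm gauge length.
forces = [0.0, 400.0, 800.0, 1200.0, 1600.0, 1800.0, 1900.0, 1850.0]
extensions = [0.0, 0.01, 0.02, 0.03, 0.04, 0.10, 0.20, 0.30]
features = extract_features(forces, extensions, area_mm2=10.0,
                            gauge_length_mm=50.0, elastic_points=5)
```

For this synthetic curve the features come out to a 1,900 N peak, a 1,193.75 N average, and a modulus of about 200 GPa.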

Traditional machine learning algorithms tend to be simpler in architecture and rely heavily on manual feature engineering and selection.
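
A deliberately basic machine learning model that works on hand-picked features is a nearest-centroid classifier. It averages the standardized features of previously labeled good and bad parts, then labels a new part by whichever average it is closest to. The feature values and labels below are hypothetical, and a production system would typically use an established library and far more training data.

```python
import math

def fit_scaler(rows):
    """Per-feature mean and standard deviation, so features in different units are comparable."""
    cols = list(zip(*rows))
    means = [sum(c) / len(c) for c in cols]
    stds = [math.sqrt(sum((x - m) ** 2 for x in c) / len(c)) or 1.0
            for c, m in zip(cols, means)]
    return means, stds

def scale(row, means, stds):
    return [(x - m) / s for x, m, s in zip(row, means, stds)]

def train_nearest_centroid(rows, labels):
    """Average the standardized feature vectors of each labeled class."""
    means, stds = fit_scaler(rows)
    centroids = {}
    for label in set(labels):
        members = [scale(r, means, stds) for r, lab in zip(rows, labels) if lab == label]
        centroids[label] = [sum(col) / len(col) for col in zip(*members)]
    return means, stds, centroids

def predict(model, row):
    """Label a new part by the closest class centroid (Euclidean distance)."""
    means, stds, centroids = model
    x = scale(row, means, stds)
    return min(centroids, key=lambda label: math.dist(x, centroids[label]))

# Hypothetical engineered features: [peak force (N), Young's modulus (GPa)].
history = [[1900, 200], [1890, 198], [1910, 202],   # parts that passed
           [1600, 185], [1620, 188], [1590, 183]]   # parts that later failed
labels = ["good", "good", "good", "bad", "bad", "bad"]

model = train_nearest_centroid(history, labels)
print(predict(model, [1880, 199]), predict(model, [1610, 186]))  # good bad
```

Standardizing first matters here: without it, the peak force (in the thousands) would dominate the distance and the modulus would barely count.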

Deep learning algorithms are more advanced machine learning algorithms built on a neural network architecture, in which multiple processing layers are connected to one another, allowing patterns to be recognized by processing large volumes of data.

This architecture allows the algorithm to learn multiple levels of data representation automatically, reducing the need for manual feature engineering and enabling more advanced feature extraction.
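
The layered structure described above can be illustrated with the forward pass of a small, fully connected network that takes a resampled raw force curve directly as input, with no hand-picked features. The layer sizes and the randomly initialized weights are assumptions for illustration only. In a real system the weights would be learned by training (for example, with backpropagation) on many labeled tests, and a deep learning framework would normally replace these hand-written loops.

```python
import math
import random

def relu(z: float) -> float:
    return max(0.0, z)

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def dense(inputs, weights, biases, activation):
    """One fully connected layer: each neuron weighs every input, then applies an activation."""
    return [activation(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]

def init_layer(n_in, n_out, rng):
    """Small random weights and zero biases (untrained)."""
    weights = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out

def forward(curve, layers):
    """Pass the raw curve through each layer in turn; each layer re-represents the previous one."""
    x = curve
    for i, (weights, biases) in enumerate(layers):
        activation = sigmoid if i == len(layers) - 1 else relu
        x = dense(x, weights, biases, activation)
    return x[0]  # untrained "failure score" in (0, 1)

rng = random.Random(0)   # fixed seed so the sketch is repeatable
sizes = [16, 8, 4, 1]    # hypothetical: 16 curve samples -> two hidden layers -> 1 output
layers = [init_layer(a, b, rng) for a, b in zip(sizes, sizes[1:])]

curve = [math.sin(math.pi * i / 15) for i in range(16)]  # normalized example curve
score = forward(curve, layers)
```

Because the weights here are random, the score itself is meaningless; the point is the stacking of layers, which is what training would shape into useful intermediate representations.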

Just as humans learn and adapt their thinking based on experience, so does the neural network structure of AI. The more data the system has to sift through, or gain “experience” from, the more effective it generally becomes, which is a necessity for effective preventive action against premature product failure.

For either form of AI to produce the most accurate predictions, a large amount of data from many tests is needed. Both the quantity and the quality of the collected data determine how helpful AI can be.

To improve quality, the data goes through a preprocessing phase in which it is “cleaned”: missing information and inconsistencies in the input data are corrected or smoothed out before the data reaches the AI programming. If the data quality is poor, for example because of inaccurate measurements or results, the analysis may classify outlying samples that should have failed as passing, introducing risk and incorrect data into the overall analysis. On the flip side, good samples incorrectly flagged as failures may hamper production and innovation.
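
A minimal cleaning step might fill short gaps by linear interpolation between the nearest valid neighbors and discard physically implausible readings before analysis. The sketch below assumes missing readings arrive as `None` or NaN and treats readings beyond a hypothetical sensor capacity as invalid; real preprocessing rules depend on the equipment and the test method.

```python
import math
from typing import Optional

def clean(readings: list[Optional[float]], capacity_n: float) -> list[float]:
    """Replace missing or invalid readings by linear interpolation.

    A reading is invalid if it is None, NaN, or exceeds the (hypothetical)
    sensor capacity in magnitude. Gaps at the start or end are filled with
    the nearest valid value.
    """
    valid = [r is not None and not math.isnan(r) and abs(r) <= capacity_n
             for r in readings]
    good_idx = [i for i, ok in enumerate(valid) if ok]
    if not good_idx:
        raise ValueError("no valid readings to interpolate from")
    out = []
    for i, r in enumerate(readings):
        if valid[i]:
            out.append(float(r))
            continue
        # Nearest valid neighbors on each side of the gap.
        left = max((j for j in good_idx if j < i), default=None)
        right = min((j for j in good_idx if j > i), default=None)
        if left is None:
            out.append(float(readings[right]))
        elif right is None:
            out.append(float(readings[left]))
        else:
            frac = (i - left) / (right - left)
            out.append(readings[left] + frac * (readings[right] - readings[left]))
    return out

raw = [10.0, None, 30.0, float("nan"), 50.0, 9999.0, 70.0]
print(clean(raw, capacity_n=1000.0))  # [10.0, 20.0, 30.0, 40.0, 50.0, 60.0, 70.0]
```

Interpolation like this is best limited to short gaps; filling across long gaps, or across the moment a specimen actually breaks, could mask real behavior.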

This is where the data filtering methods described earlier in this article become useful for AI. Smoothing noisy data provides a more consistent dataset ahead of the analysis, allowing AI to follow trends more accurately and ultimately produce more accurate quality predictions.

In Conclusion

Preventing product failures, whether the fault lies in the design, the materials used, or the manufacturing process, requires high-quality data acquired and analyzed as accurately as possible. Depending on the complexity of the data, the available computing resources, and the budget for integration, many solutions can be used in quality control environments to help prevent failures, all of which benefit from the use of force measurement.